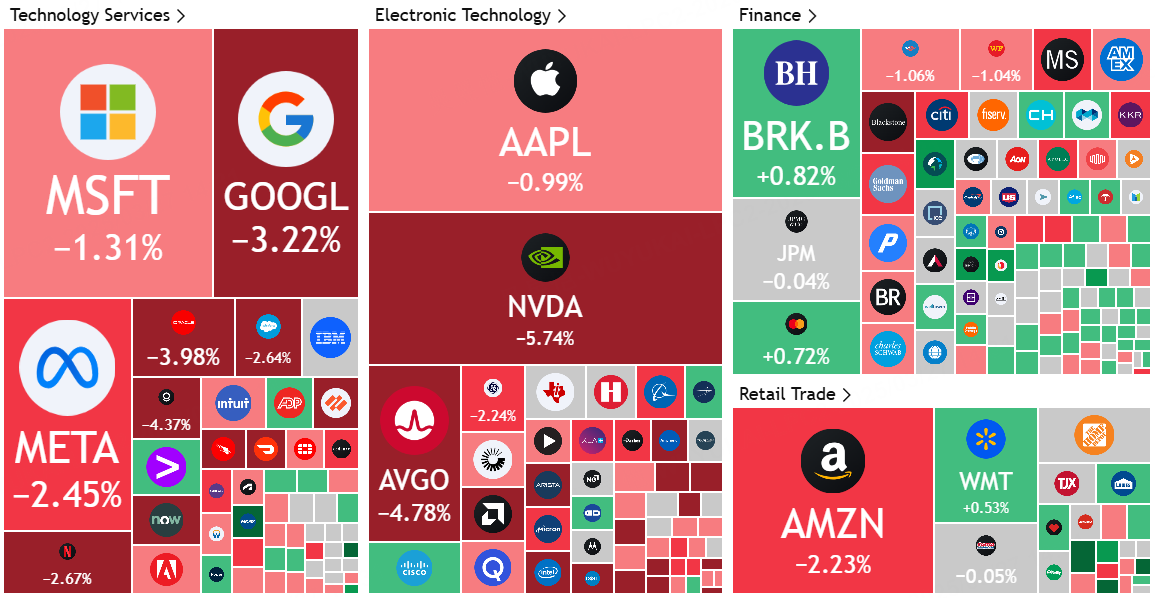

Yesterday the compute supply chain plunged on reports that $Microsoft(MSFT)$ was canceling and delaying data center leases, yet $NVIDIA(NVDA)$ fell even further (-5.74%).

NVDA's drop this time may have a different cause. The worry that gains in compute efficiency will reduce demand for GPUs was already priced in during the DeepSeek wave in January, so it makes little sense for the market to trade the same theme again.

NVDA's own Blackwell series may be the bigger worry for the market:

The GB200's supply chain challenges, which have been widely reported before, include:

Deployment complexity: installing a GB200 system takes 5 to 7 days, and systems frequently become unstable or crash during operation.

Hardware architecture complexity: there are many deployment variants, each with its own advantages and disadvantages.

Configuration complexity: only NVIDIA's own engineers are familiar with the overall rack setup, which makes it hard for customers to manage the equipment themselves.

Infrastructure requirements: many data centers cannot meet the GB200's operating requirements, such as high power consumption and complex cooling systems, which limits its widespread deployment.

As a result, many cloud service providers (CSPs) have expressed interest in switching back to the older HGX offerings, and the GB200 supply chain issues could lead to lower-than-expected revenue growth for NVIDIA.

The current conservative market estimate for GB200 shipments in 2025 is approximately 15,000 racks.

Under the GB300 roadmap, samples go to customers for testing between Q4 2025 and Q1 2026, with volume shipments not starting until 2026. However, other reports say NVDA plans to release the GB300 early, in Q2 2025, which could cut further into GB200 sales.

Overall, market concerns about the GB200 could be a significant factor weighing on NVDA's share price in the short term (roughly the next six months).
